The metaverse, a virtual world in which people can interact, work and play, has gained considerable importance in recent years. With the increasing integration of technologies such as virtual reality (VR) and augmented reality (AR), the metaverse is becoming an ever more realistic and tangible part of our lives. But how does this digital transformation affect our ability to empathize? In this blog post, I explore the role of empathy in the metaverse and how it is shaping our interpersonal relationships in this new digital era.

The importance of empathy in the digital space

Empathy, the ability to understand and share the feelings and perspectives of others, is a fundamental part of human interaction. In physical space, empathy is supported by non-verbal cues such as facial expressions, gestures and tone of voice. But how can empathy be maintained in a virtual environment where these cues are often absent?

Recent research suggests that the metaverse has the potential to promote empathy in new ways. Through immersive experiences, users can step into the perspectives of others and witness their circumstances up close. One example is the use of VR to simulate the lives of people in crisis areas. Such experiences can evoke profound emotional responses and increase understanding of, and empathy for, those affected¹.

Empathy as the key to brand engagement

Empathy also plays a crucial role in brand engagement in the metaverse. Companies that manage to build empathetic connections with their customers can create deeper engagement and loyalty. Willem Haen from Frontify emphasizes that empathy, emotion and inclusion are the three key components of successful brand engagement in the metaverse². By creating authentic and emotional experiences, brands can increase not only customer loyalty but also employee loyalty.

Challenges and opportunities

Despite the promising opportunities, there are also challenges. The anonymity and distance in the metaverse can lead to a lack of responsibility and empathy. It is therefore important to develop mechanisms that promote positive interactions and minimize negative behaviors.

Another aspect is the so-called digital divide. Not everyone has access to the technologies needed to participate in the metaverse, which could deepen existing divisions in society. To counteract this, measures must be taken to expand access to these technologies and make them more inclusive.

Examples of empathy in the metaverse

1. Virtual therapy and support: In the metaverse, virtual therapy sessions can be offered in which therapists and clients interact in a safe, anonymous environment. This can be particularly helpful for people who feel uncomfortable in traditional therapy settings³.

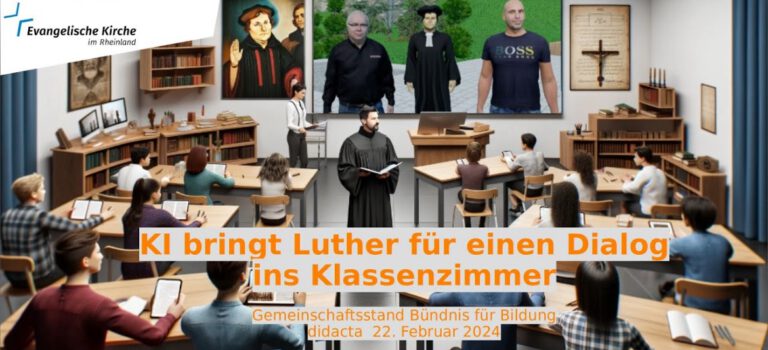

2. Educational programs: Schools and universities can use the metaverse to teach empathy to pupils and students through immersive experiences. For example, students can take on the role of historical figures or experience life in different cultures to develop a deeper understanding and empathy⁴.

3. Virtual memorials: Memorials in the metaverse allow people to mourn together and share memories, regardless of their physical location. These virtual spaces provide a platform for collective remembrance and support⁴.

4. Inclusion initiatives: Companies and organizations can develop programs in the metaverse that are tailored to the needs of people with disabilities, for example through accessible virtual environments and adapted avatars that enable everyone to participate on an equal footing³.

5. Virtual volunteering: People can participate in volunteering projects in the metaverse, such as virtual mentoring programs for young people or support for non-profit organizations. These activities promote empathy and social engagement by giving people the opportunity to help others⁴.

Conclusion

The metaverse offers a unique opportunity to foster empathy in new and innovative ways. Through immersive experiences and empathetic brand engagement, deeper and more meaningful connections can be created. At the same time, we need to be aware of the challenges and actively work to create an inclusive and responsible digital world.

Empathy in the metaverse is not just a question of technology, but also of human values and relationships. By integrating these values into the digital world, we can create a future where the metaverse is not just a place of entertainment, but also of understanding and empathy.

¹: Fraunhofer ISI. "Will we all soon be living in the metaverse?" Accessed June 18, 2024.

²: t3n. "With 3 E's to brand engagement in the metaverse: Empathy, Emotion and Inclusion." Accessed on June 18, 2024.

³: Deloitte. "Virtual worlds, real emotions: Customer experiences in the metaverse." Accessed June 18, 2024.

⁴: Droste, Andreas. *New Work in the metaverse: Experiences, application examples and potentials from three years of metaverse business practice*. tredition, 2023.